The CPU, or Central Processing Unit, is the brain of the digital computer and of the modern world. We are surrounded by CPUs, from our fridges and washing machines to our phones, TVs and cars. But with the rise of the GPU, the CPU is no longer the only processing powerhouse of a computer. What made the Graphics Processing Unit, once used only for graphics, a staple of high-performance computing?

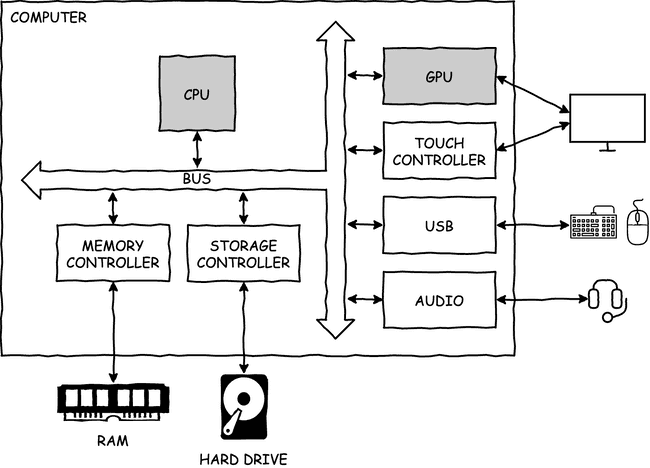

A typical computer

Whether it is the tiniest computer in our car, the advanced computer powering our smartphone, or the supercomputer that does scientific modeling and forecasting, the design is essentially based on the same principle. A computer consists of:

- CPU: the brain that processes data and controls everything in the system.

- Memories: store data either temporarily (e.g. RAM) or permanently (e.g. hard drive, flash memory).

- Peripherals: interact with the user (e.g. monitor, keyboard), the environment (e.g. sensors, actuators) and other devices (e.g. wired and wireless connectivity).

- Bus: connects everything together.

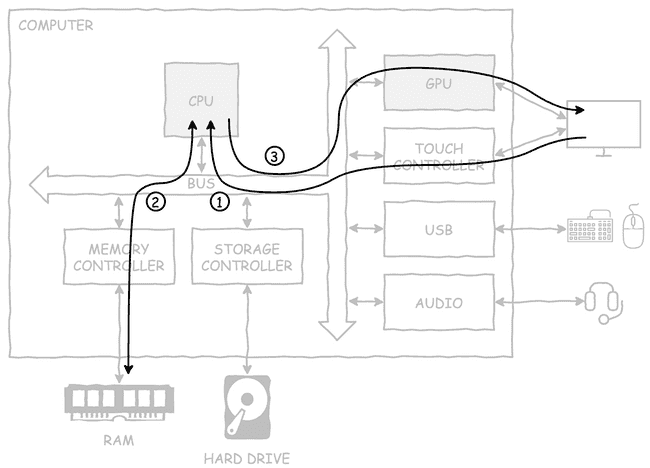

To demonstrate how the system works, especially the interaction between the CPU and GPU, imagine we touch a button on the smartphone screen:

- The capacitive touch controller detects the change in capacitance on the screen surface and works out the coordinate of the touch. It sends a message to the CPU to notify it of the event, and the CPU then reads the touch coordinate.

- The app running on the phone analyzes the current user interface layout stored in RAM to find which object the user has pressed. In this case the user is pressing a button.

- The app sends a list of draw commands to the GPU to redraw the button in its pressed state. The GPU executes these commands and writes the image to the display memory. The user can now see the pressed button on the screen.

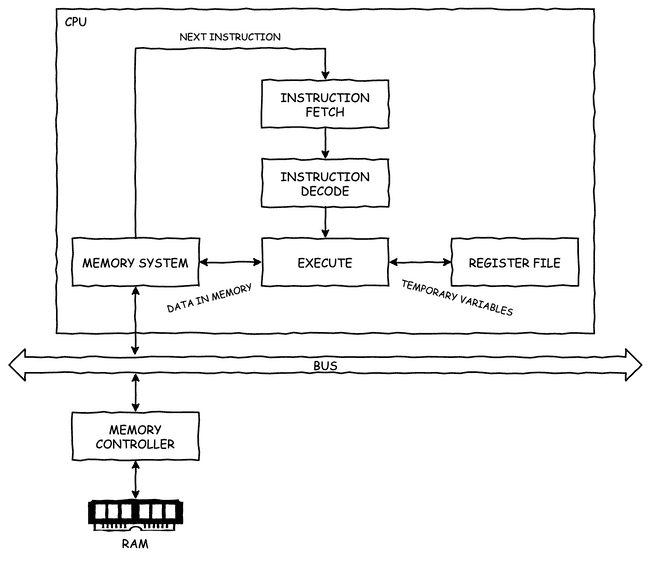
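The second and third steps above can be sketched in a few lines of Python. This is only an illustration: the `Button` class, the layout, and the draw-command names (`fill_rect`, `draw_text`) are hypothetical and do not correspond to any real UI toolkit or GPU API.

```python
from dataclasses import dataclass


@dataclass
class Button:
    name: str
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int

    def contains(self, tx: int, ty: int) -> bool:
        """True if the touch point (tx, ty) lies inside this button."""
        return (self.x <= tx < self.x + self.width
                and self.y <= ty < self.y + self.height)


def hit_test(layout, tx, ty):
    """Return the topmost widget under the touch point, or None."""
    # Assumption: later entries in the layout are drawn on top.
    for widget in reversed(layout):
        if widget.contains(tx, ty):
            return widget
    return None


def draw_commands_for_press(button):
    """Build a hypothetical draw-command list for the pressed state."""
    return [
        ("fill_rect", button.x, button.y, button.width, button.height, "dark_gray"),
        ("draw_text", button.x + 8, button.y + 8, button.name, "white"),
    ]


# The UI layout that would be stored in RAM.
layout = [Button("OK", 20, 400, 120, 48), Button("Cancel", 160, 400, 120, 48)]

# The touch controller reports a touch at (200, 420).
pressed = hit_test(layout, 200, 420)
commands = draw_commands_for_press(pressed) if pressed else []
print(pressed.name if pressed else None, commands)
```

The CPU does the branching, searching part (finding the widget), while the command list is the kind of regular, repetitive work that gets handed to the GPU.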

CPU - the general-purpose processor

The CPU is the brain of the digital computer. It fetches the program, i.e. an arbitrary sequence of instructions, from memory and executes those instructions to achieve the intended goal. Because any computable task can be described as a finite sequence of instructions (such as the touch handling and button rendering example in the previous section), the CPU is truly general purpose and can in principle run any application.

CPU designs vary massively depending on performance, energy efficiency and area requirements, but conceptually there are three main stages:

- Instruction fetch: reads the next instruction from memory (which can be temporary memory like RAM or persistent storage like flash memory).

- Instruction decode: works out what the instruction does and which operands it needs and affects.

- Execute: performs the instruction's intended function, for example an arithmetic operation, a memory access or a branch.
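The three stages can be illustrated with a toy interpreter. The machine below is made up for this sketch: it has four registers and four invented instructions (`li`, `add`, `sub`, `bnez`), and it is not modeled on any real instruction set.

```python
def run(program, max_steps=1000):
    """Run a toy program on a hypothetical 4-register machine."""
    regs = [0, 0, 0, 0]
    pc = 0      # program counter: index of the next instruction
    steps = 0
    while pc < len(program) and steps < max_steps:
        # 1. Fetch: read the next instruction from "memory".
        instr = program[pc]
        pc += 1
        # 2. Decode: split it into an operation and its operands.
        op, *operands = instr
        # 3. Execute: perform the operation.
        if op == "li":        # load immediate: regs[d] = value
            d, value = operands
            regs[d] = value
        elif op == "add":     # regs[d] = regs[a] + regs[b]
            d, a, b = operands
            regs[d] = regs[a] + regs[b]
        elif op == "sub":     # regs[d] = regs[a] - regs[b]
            d, a, b = operands
            regs[d] = regs[a] - regs[b]
        elif op == "bnez":    # branch to target if regs[a] != 0
            a, target = operands
            if regs[a] != 0:
                pc = target
        else:
            raise ValueError(f"unknown instruction: {op}")
        steps += 1
    return regs


# Sum 5 + 4 + 3 + 2 + 1: r0 accumulates, r1 counts down, r2 holds 1.
program = [
    ("li", 0, 0),
    ("li", 1, 5),
    ("li", 2, 1),
    ("add", 0, 0, 1),   # index 3: r0 += r1
    ("sub", 1, 1, 2),   # r1 -= 1
    ("bnez", 1, 3),     # loop back to index 3 while r1 != 0
]
result = run(program)
print(result[0])  # 15
```

Note that each instruction goes through all three stages before the next one starts, which is exactly the inefficiency that real CPUs work hard to remove.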

This naïve design is unfortunately hugely inefficient in practice. Because the CPU is general purpose and there is no limit on what programs can do, it needs to perform well on almost any kind of software, especially single-threaded workloads. To achieve that, any decently performant CPU needs at least some of the following optimizations:

- Instruction pipelining: Instead of fetching, decoding and executing one instruction before starting on the next, the CPU executes the current instruction while at the same time decoding the next instruction and fetching the one after that.

- Cache: RAM is relatively slow compared to the CPU (roughly hundreds of times slower), so without help the CPU would sit idle most of the time waiting for data. Caching provides a small but much faster storage close to the CPU to reduce memory access latency.

- Branch prediction: Branching, such as an `if` statement, makes the next instruction unknown until the branch instruction has been executed. Branch prediction, as its name suggests, predicts whether the branch will be taken and fetches the next instruction speculatively.

- Prefetching: Cache memories are fast but normally small and cannot store everything needed. A prefetcher predicts future memory accesses and fetches the data into the cache ahead of time, reducing the latency penalty of accesses that would otherwise miss the cache.

- Superscalar: If we want more performance, why not duplicate the pipeline (i.e. have 2 fetch units, 2 decoders and 2 execution engines)? A superscalar design duplicates some or all stages of the pipeline to improve throughput.

- Out-of-order execution: Instructions are fetched in bulk into a reorder buffer. Any instruction in the buffer that doesn't depend on an unfinished instruction is dispatched and executed immediately, regardless of program order. Combined with superscalar, the CPU can now execute many instructions at the same time, improving parallelism and keeping all the execution engines well utilized.
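Two of these techniques are easy to put numbers on. The sketch below first counts cycles for an idealized pipeline (one cycle per stage, no stalls, which is an assumption and not how real CPUs behave), then measures the accuracy of a 2-bit saturating counter, a classic textbook branch predictor chosen here purely for illustration. All function names are made up for this example.

```python
def cycles_unpipelined(n_instructions, n_stages=3):
    """Each instruction passes through every stage before the next starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions, n_stages=3):
    """Ideal pipeline: fill it once, then finish one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


def two_bit_predictor_accuracy(outcomes):
    """Fraction of branches predicted correctly by one 2-bit counter."""
    counter = 2  # 0-1 predict "not taken", 2-3 predict "taken"
    correct = 0
    for taken in outcomes:
        predicted_taken = counter >= 2
        if predicted_taken == taken:
            correct += 1
        # Move toward the actual outcome, saturating at 0 and 3.
        counter = min(3, counter + 1) if taken else max(0, counter - 1)
    return correct / len(outcomes)


n = 1000
speedup = cycles_unpipelined(n) / cycles_pipelined(n)
print(f"pipelining speedup for {n} instructions: {speedup:.2f}x")

# A loop branch: taken 9 times, then not taken once, repeated 10 times.
loop_branch = ([True] * 9 + [False]) * 10
accuracy = two_bit_predictor_accuracy(loop_branch)
print(f"2-bit predictor accuracy on a loop branch: {accuracy:.0%}")
```

With three stages the ideal speedup approaches 3x for long instruction sequences, and the predictor only misses the final iteration of each loop. A misprediction forces the pipeline to throw away the speculatively fetched work, which is why prediction accuracy matters so much.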

These are only some of the most important optimization techniques, and yet implementing them alone can take decades of engineering effort. They also make the design extremely complicated, occupy a huge silicon area and reduce energy efficiency, all so that the CPU can perform well on almost any arbitrary workload. Ultimately, the general-purpose requirement of the CPU is what limits how far it can push its performance per watt.

GPU for graphics

3D rendering is inherently computationally intensive. To render just one pixel on the screen, the renderer needs to determine the corresponding light ray from the scene to the viewpoint, and work out which object it belongs to and which surface of that object it hits. Then it needs to calculate the color, which depends on many things such as the light sources, the surface material and the properties of any transparent medium between the object and the eye (e.g. glass windows, outdoor fog).

When we take into account that a typical game scene can have thousands of objects and a typical screen today has millions of pixels, rendering the scene 60, 120 or even 240 times per second is virtually impossible without specialized hardware support.
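To get a feel for the scale, here is a quick back-of-the-envelope calculation. The 1920×1080 resolution is an assumption picked for illustration; the text above only says "millions of pixels".

```python
WIDTH, HEIGHT = 1920, 1080  # assumed Full HD screen

pixels_per_frame = WIDTH * HEIGHT
print(f"pixels per frame: {pixels_per_frame:,}")

for fps in (60, 120, 240):
    pixels_per_second = pixels_per_frame * fps
    frame_budget_ms = 1000 / fps  # time available to render one frame
    print(f"{fps:>3} fps: {pixels_per_second:,} pixels/s, "
          f"{frame_budget_ms:.2f} ms per frame")
```

Even at 60 frames per second that is over a hundred million pixel colors to compute every second, each of which may involve many objects and light sources.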

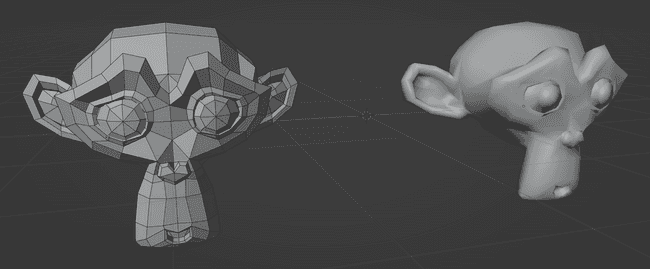

In 2D graphics, a regular polygon with a high enough number of sides is visually indistinguishable from a circle. Similarly, in 3D graphics a curved surface is represented by a polygon mesh (like the 3D model of the Suzanne monkey above). The finer the mesh, the smoother the surface becomes, but also the more effort is needed to render it.
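The polygon-to-circle claim can be checked numerically. A regular polygon with n sides inscribed in a circle of radius r has area (n/2)·r²·sin(2π/n), and the sketch below compares that with the area of the circle. The function name is made up for this example.

```python
import math


def inscribed_polygon_area(n_sides, radius=1.0):
    """Area of a regular polygon inscribed in a circle of the given radius."""
    return 0.5 * n_sides * radius ** 2 * math.sin(2 * math.pi / n_sides)


circle_area = math.pi  # unit circle
for n in (6, 24, 96, 384):
    area = inscribed_polygon_area(n)
    error = (circle_area - area) / circle_area
    print(f"{n:>3} sides: area = {area:.6f}, relative error = {error:.4%}")
```

The error shrinks quickly as the number of sides grows, but every extra side is an extra piece of geometry to process, which is the same trade-off a finer 3D mesh makes.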

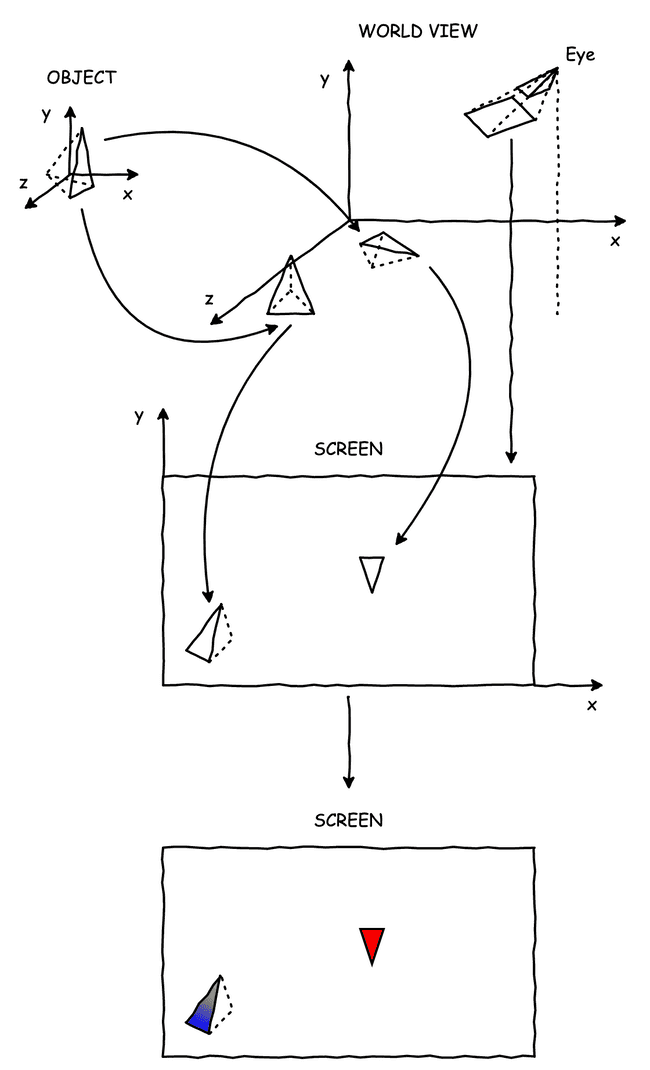

The graphics pipeline is quite complicated, so for simplicity let's assume a very simple pipeline that uses a triangle mesh and consists of only two steps:

- Vertex shading: transforms the vertices of all the triangles in the model to screen coordinates.

- Fragment shading: calculates some properties (e.g. color) for each pixel on the screen based on the list of triangles provided by vertex shading.

Why does graphics hardware for gaming use triangles rather than quads?

3D graphics for gaming doesn't use quads like the Suzanne monkey model above; it works with triangles instead. Triangles have some advantages that make them much easier to deal with, and hence more performant:

- Any polygon can be subdivided into triangles. A quad, for example, can be divided into two triangles.

- A triangle is always convex, and the order of its vertices doesn't change its shape.

- A triangle always lies in a flat plane.
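As a minimal sketch of the first point, a convex polygon can be split into triangles by "fanning out" from its first vertex. This simple approach is only valid for convex polygons (concave ones need a more careful algorithm), and the function names are made up for this example.

```python
def fan_triangulate(polygon):
    """Split a convex polygon (vertices listed in order) into triangles."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    first = polygon[0]
    # Each triangle shares the first vertex and one edge of the polygon.
    return [(first, polygon[i], polygon[i + 1])
            for i in range(1, len(polygon) - 1)]


def triangle_area(a, b, c):
    """Area of a 2D triangle from the cross product of two edges."""
    return abs((b[0] - a[0]) * (c[1] - a[1])
               - (c[0] - a[0]) * (b[1] - a[1])) / 2


quad = [(0, 0), (2, 0), (2, 1), (0, 1)]   # a 2 x 1 rectangle
triangles = fan_triangulate(quad)
total_area = sum(triangle_area(*t) for t in triangles)
print(len(triangles), total_area)  # 2 triangles covering the same area, 2.0
```

A polygon with n vertices always yields n - 2 triangles this way, so the cost of converting a mesh to triangles is small and predictable.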

Normally the same object, e.g. a tree, can appear in many places in the scene, differing in location, rotation and size. To render it, we need to transform each instance of the model to screen coordinates. Fortunately, since each vertex coordinate can be seen as a vector, all the transformations (rotation, scaling, perspective projection, etc.) can be done using matrix multiplication. If an object has 1000 triangles, all the vertices can be packed into one large matrix and transformed all at once.
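Here is a minimal sketch of that idea in plain Python, using 4×4 matrices and homogeneous coordinates (a standard convention in 3D graphics, though the text above does not name it). Perspective projection is left out to keep the example short, and all helper names are made up. A real renderer would run this on the GPU rather than in Python loops.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def scale(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]


def rotate_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def translate(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


# Three vertices stored as the COLUMNS of a 4 x N matrix (x, y, z, w rows).
vertices = [
    [1.0, 0.0, 0.0],   # x
    [0.0, 1.0, 0.0],   # y
    [0.0, 0.0, 1.0],   # z
    [1.0, 1.0, 1.0],   # w (always 1 for points)
]

# Combine scale -> rotate -> translate into ONE matrix (applied right to left).
model = matmul(translate(10, 0, 0), matmul(rotate_z(math.pi / 2), scale(2)))

# A single multiplication transforms every vertex at once.
transformed = matmul(model, vertices)
for column in zip(*transformed):
    print([round(value, 6) for value in column[:3]])
```

The key point is that the whole chain of transformations collapses into one matrix, so placing another instance of the same tree only requires a different `model` matrix applied to the same vertex data.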

Fragment shading varies massively depending on the kind of rendering we're doing, but it normally performs some form of element-wise operation to calculate the value of each pixel inside each triangle. For example, if the surface of the object has a texture image, the fragment shader "paints" the surface with the texture.
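A minimal sketch of such a per-pixel operation is shown below. It blends three per-vertex colors across a triangle using barycentric weights. This is one common way to interpolate values inside a triangle, used here as an assumption for illustration, and texture coordinates can be interpolated the same way before looking up the texture. The function names and the tiny 8×8 "screen" are made up.

```python
def edge(a, b, p):
    """Twice the signed area of triangle a-b-p."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def shade_triangle(v0, v1, v2, colors, width, height):
    """Return a framebuffer with interpolated colors inside the triangle."""
    framebuffer = [[None] * width for _ in range(height)]
    area = edge(v0, v1, v2)
    if area == 0:
        return framebuffer  # degenerate triangle covers no pixels
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            # Barycentric weights: how close p is to each vertex.
            w0 = edge(v1, v2, p) / area
            w1 = edge(v2, v0, p) / area
            w2 = edge(v0, v1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:  # p is inside the triangle
                framebuffer[y][x] = tuple(
                    w0 * c0 + w1 * c1 + w2 * c2
                    for c0, c1, c2 in zip(*colors)
                )
    return framebuffer


red, green, blue = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
image = shade_triangle((0, 0), (8, 0), (0, 8), (red, green, blue), 8, 8)
covered = sum(pixel is not None for row in image for pixel in row)
print(covered, image[0][0])
```

Every pixel runs exactly the same arithmetic on different inputs and never depends on its neighbors, which is what makes this step so easy to parallelize.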

GPU for compute

Advancement in GPUs has been largely driven by the ever-increasing demand for realistic gaming graphics. But as we can see from the previous section, the art of drawing eye-catching things turns out to rely heavily on performing repetitive math on a huge number of triangles. Many applications, most notably deep learning and scientific computing, have successfully harnessed the incredible computational power of the GPU to accelerate compute workloads, simply because they need the same operations the gaming industry relies on: matrix multiplication, dot products and element-wise operations.

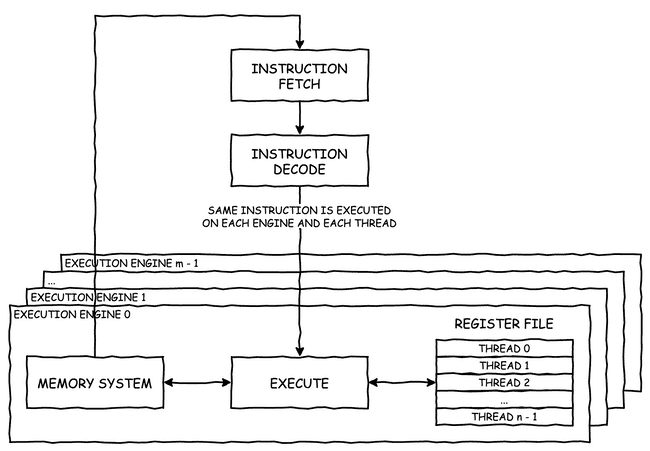

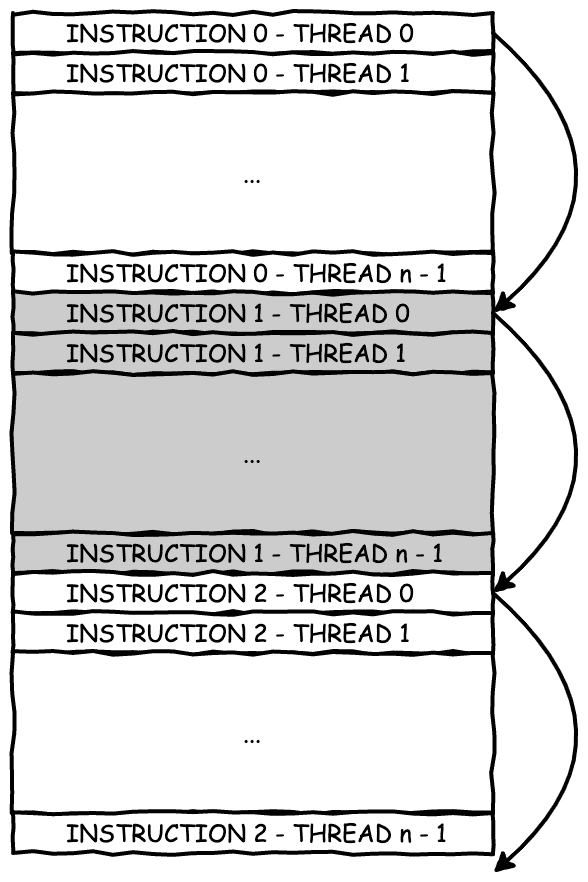

Since GPU workloads are already highly parallel, the key idea of GPU design is to run the same instruction on different data. For example, vertex shading can be performed in exactly the same way for all objects in the scene; the only differences are the model and the transformation matrix. Conceptually speaking, a GPU core can be designed using the now-familiar CPU stages of fetch, decode and execute.

Instead of trying to reorder and parallelize the sequence of instructions like a CPU core does, a GPU execution engine switches between threads (i.e. switches between different data) incredibly fast so that the execution engine is kept busy all the time. The main advantage of interleaving multiple threads is that the latency of each instruction no longer matters. Given that each execution engine can interleave thousands of threads, the "distance" between consecutive instructions of the same thread is large enough to hide all pipeline stage dependencies and the memory access latency.
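The effect of interleaving can be seen in a toy simulation. The model below is deliberately simplified and is an assumption for illustration, not a description of any real GPU: one execution engine issues at most one instruction per cycle, and after a thread issues an instruction it must wait `latency` cycles before it can issue its next one.

```python
def utilization(n_threads, latency, n_cycles=10_000):
    """Fraction of cycles in which the engine issues an instruction."""
    ready_at = [0] * n_threads  # cycle at which each thread can issue again
    busy_cycles = 0
    next_thread = 0
    for cycle in range(n_cycles):
        # Round-robin: pick the first ready thread, starting at next_thread.
        for offset in range(n_threads):
            t = (next_thread + offset) % n_threads
            if ready_at[t] <= cycle:
                ready_at[t] = cycle + latency  # result arrives later
                busy_cycles += 1
                next_thread = (t + 1) % n_threads
                break
        # If no thread was ready, the engine idles for this cycle.
    return busy_cycles / n_cycles


for n_threads in (1, 50, 100, 200):
    print(f"{n_threads:>3} threads, latency 100: "
          f"utilization = {utilization(n_threads, 100):.0%}")
```

With a single thread the engine is idle 99% of the time. Once there are at least as many threads as cycles of latency, the engine never waits, without needing any branch prediction, prefetching or reordering logic.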

Caching is no longer vital for hiding latency (although in practice it is still, and increasingly, important for other reasons), because memory access latency no longer limits performance; the same goes for prefetching. Branch prediction is not needed because a thread's previous instruction has fully completed before its next one is executed. Out-of-order execution doesn't matter because all the execution engines are already busy all the time. Simply put, a GPU core doesn't need most of the complicated optimizations of a CPU, yet it can achieve extremely high utilization.

The parallel and predictable nature of GPU workloads lets the design be very simple and scale massively better. One GPU core can handle thousands of threads (as opposed to normally one or two threads for a CPU core). A simpler design also means less silicon area (so more cores fit in the same chip) and better energy efficiency (so the chip is easier to cool, which again allows more cores).

CPU vs. GPU

All the explanation above should give the impression that CPU vs. GPU is not exactly clear-cut. Each has its own history and purpose, and hence its own optimization objectives.

The simple rule is: if the workload is parallel by nature (as an analogy, if the data flows like a stream of water) and it is large, the GPU is much faster. Otherwise, stick with the CPU: it can run any program, it is extremely well optimized, and it performs well regardless of what the workload is trying to do.